World University Rankings: Are They of Any Use?

World University Rankings are an interesting phenomenon. Some of their first editions were published around the early 2000s, and they seem to have become increasingly controversial over the years.

The rankings said to have the largest audiences, and possibly also the largest impact, are the QS World University Rankings, the Times Higher Education (THE) World University Rankings, the Academic Ranking of World Universities (ARWU) and the US News & World Report Global Ranking.

I stumbled upon them about two years ago and did not really think much of them at first. At a later point, out of curiosity, I looked up the placements of the universities that first came to my mind: Swiss universities in my surroundings, universities my friends have told me about, internationally reputable ones one reads about like Harvard, and so forth.

Some placements surprised me, for example how ETH Zurich was ranked as high as #6 in QS. Most of the other placements were in line with my expectations: Elite UK universities like Oxbridge and Ivy League institutions filled the Top 20 in pretty much all of the tables. Naturally, I myself am also guilty of being fascinated with the reputation and history (and really mostly the architectural beauty) of institutions such as the University of Oxford.

All of this left me wondering: What can one really make of these rankings, and more importantly, what are they based on?

The Data and the Methodology

A first important question to answer might be how many universities there even are in the world. Turns out that finding this information is not exactly straightforward: Answers range from 9696 to 13800 to 19800 to 30586 up to 51633. Unfortunately, many of the sources behind these numbers don’t properly disclose how they arrived at their result. To make it short, the data from the WHED seems the most well-founded to me, so I’ll go with the number of 19800 for the sake of this next point.

Now, all of the mentioned rankings look at a pool of roughly 1500 to 2000 universities and eventually list a “Top 1000”, some going a bit further and listing a “Top 1500”. Realistically, what people really care about is the “Top 100” or “Top 200”, because who wants to scroll through a list of 1500 universities? Right, nobody. Either way, this raises a first big question mark: How do you narrow down roughly 20’000 universities, among them some that were only recently founded (e.g. the University of Lucerne, founded in 2000), to the “best” 0.5% (Top 100) or roughly 7.6% (Top 1500), without even giving the vast majority a thorough look before deciding who makes the cut?
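For anyone who wants to check the arithmetic, here is a minimal Python sketch of how these shares work out as percentages. It assumes the WHED-based total of 19800 universities mentioned above; the function name `share_percent` is just an illustrative choice of mine.

```python
# Total number of universities worldwide (the WHED-based figure used in the text).
TOTAL_UNIVERSITIES = 19_800


def share_percent(list_size: int, total: int = TOTAL_UNIVERSITIES) -> float:
    """Return the share of all universities covered by a list, in percent."""
    return 100 * list_size / total


# Compare the sizes of the published lists and the evaluated pool to the total.
for label, size in [
    ("Top 100", 100),
    ("Top 1500", 1500),
    ("Evaluated pool (upper end)", 2000),
]:
    print(f"{label}: {share_percent(size):.2f}% of all universities")
```

With a different estimate of the total (the sources range from 9696 to 51633), the percentages shift accordingly, but the basic picture stays the same: only a small slice of the world's universities is evaluated at all.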

The most commonly used ranking indicators provide a first answer to this question. Here they are:

- Number of publications

- Number of citations (e.g. within the past 5 years)

- Reputation in higher education and/or among employers (assessed via large surveys, sometimes including over 100'000 individuals)

- Faculty/student ratio

- Percentage of international students and faculty

- Income (focus varies; may be institutional, research or industry income, or all three)

It is important to note that not all rankings include all of the listed indicators — some of them use unique ones that the others don’t use. The ARWU for example takes the number of alumni and staff winning Nobel Prizes/Fields Medals into account, while none of the others do so.

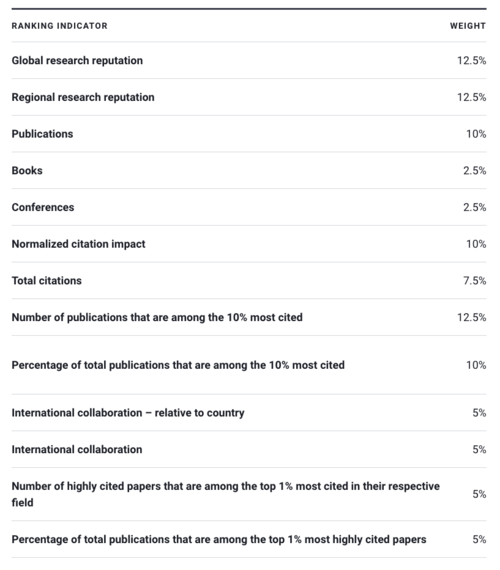

Furthermore, the amount of emphasis placed on each of the indicators also varies between rankings. If you’re interested in reading the details for each and every one of them, you’ll find all of the information available on the respective websites (e.g., THE has an extensive PDF file covering their methodology). The US News & World Report provides a more or less compact overview that fits on a single screenshot:
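To illustrate why the weighting matters, here is a small Python sketch of a weighted composite score. Everything in it is hypothetical: the two universities, their indicator scores and both weighting schemes are made up for illustration and do not correspond to any actual ranking's methodology.

```python
def composite_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of indicator scores (each assumed to be on a 0-100 scale)."""
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


# Hypothetical indicator scores for two made-up universities.
universities = {
    "University A": {"citations": 90, "reputation": 60, "faculty_student_ratio": 70},
    "University B": {"citations": 65, "reputation": 90, "faculty_student_ratio": 75},
}

# Two hypothetical weighting schemes that emphasize different indicators.
weightings = {
    "citation-heavy": {"citations": 0.6, "reputation": 0.2, "faculty_student_ratio": 0.2},
    "reputation-heavy": {"citations": 0.2, "reputation": 0.6, "faculty_student_ratio": 0.2},
}


def rank(weights: dict[str, float]) -> list[str]:
    """Return the university names sorted from highest to lowest composite score."""
    return sorted(
        universities,
        key=lambda name: composite_score(universities[name], weights),
        reverse=True,
    )


for scheme, weights in weightings.items():
    print(scheme, "->", rank(weights))
```

In this toy example, the same two universities swap places depending purely on which indicator is emphasized, even though nothing about the institutions themselves has changed.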

Criticism

So far, not everything I have mentioned explicitly speaks against these rankings – there may be some variation in methodology, and the rankings only include a fraction of the universities that exist worldwide, but isn’t that the entire point?

First of all, I do think it’s notable that these rankings are (relatively) transparent with their methodology, enabling the readers to decide for themselves what to make of the information available. BUT: How many high school graduates will bother to check up on the methodology if the rankings’ websites put out statements like “… Our flagship analysis, the THE World University Rankings, is the definitive list of the top universities globally”? Not too many, I would imagine.

Thus, as with most attempts to measure something that is inherently connected to personal opinions and biases, determining the quality of a university is hardly an objective process, and consequently these rankings should not be confused with and/or sold as facts. On the other hand, I would also not want to label them as completely useless or inaccurate — I simply think it has to be made clear that they need to be taken with a grain (or maybe rather a teacup) of salt.

I want to further include a few points that were brought up in a news article by Elizabeth Gadd published in Nature World View:

… [The rankings] favour publications in English, and institutions that did well in past rankings. So, older, wealthier organizations in Europe and North America consistently top the charts. … … These league tables, produced by the Academic Ranking of World Universities (ARWU) and the Times Higher Education World University Ranking (THE WUR) and others, determine eligibility for scholarships and other income, and sway where scholars decide to work and study. Governments devise policies and divert funds to help institutions in their countries claw up these rankings. Researchers at many institutions, such as mine, miss out on opportunities owing to their placing. …

What I find interesting here is that you could probably name any one university that isn’t entirely falling apart to be “Top 200” or “Top 100”, and as more and more excellent students and faculty are drawn towards it due to its reputation, the number of outstanding achievements will continually increase, incoming funding and grants will increase over time, and so on — the label that originally might have been unfounded will most likely end up holding true.

I’m sure you yourself can think of many more points that could be brought up. However, instead of listing more criticisms, I would like to end this by mentioning some projects that attempt to give all of these rankings a positive spin.

The Future (?)

One might wonder whether there is a way to create a better version of these rankings, or if it would be better for them to be abolished for good. There are three organizations that pick up on this question (which were also linked in the Nature article):

The Leiden Ranking: A ranking by Leiden University where “it is up to you to select the indicator that you wish to use to rank universities”. There are also a few interesting additions like the map view, which provides a geographical perspective. I have not yet had a thorough look at it, but if you’re interested in checking for yourself, the ten principles for responsible use of university rankings seem like a good start.

INORMS and the European Commission: The International Network of Research Management Societies (INORMS) put together a working group to come up with criteria for responsible conduct and use of university rankings. Furthermore, the European Commission very recently (September 2020) put out a publication named Towards a 2030 vision on the future of universities in the field of R&I in Europe (a 200-page document — needless to say, I have not read much of it yet), which conducted case studies on some of the most popular university rankings, e.g. the THE ranking in chapter 4.4.4. If I do find anything of interest in there, I will make sure to bring it up in one of my next writings.

In conclusion, fortunately, a handful of (influential) organizations are now putting a good amount of resources into this matter, which only further underlines its importance. Naturally, universities are also facing many other challenges, not least because of the current situation; I suppose it will be interesting to see how it all pans out.